As organizations adopt AI-assisted tools to boost productivity and streamline development, they must also address the security implications. AI-assisted development accelerates innovation, but when AI usage is not governed or attributed, it also introduces new security challenges.

Without visibility into how developers use AI tools, organizations struggle to enforce security standards, licensing requirements, and compliance policies across the software development lifecycle (SDLC). When developer and AI actions cannot be traced, risks introduced during development often go undetected until they surface as incidents or compliance failures.

Common security risks associated with AI-assisted development include:

Insecure AI-Generated Code

AI tools may generate code that does not adhere to secure coding standards, introducing vulnerabilities such as injection flaws or insecure patterns.

AI Code Compliance Gaps

AI-generated code may violate licensing requirements, intellectual property policies, or internal development standards when usage is not governed.

Data Exposure and Leakage

Sensitive information may be exposed through AI prompts or inadvertently embedded in AI-generated code.

Unattributed AI Usage

When AI contributions are not linked to specific developers, accountability is lost and it becomes unclear who should remediate the issues they introduce.
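To make the first risk concrete, here is a minimal Python sketch of the kind of injection flaw an AI assistant might suggest, shown next to the parameterized alternative. The `users` table, the function names, and the payload are hypothetical illustrations, not output captured from any particular AI tool.

```python
import sqlite3


def setup_db() -> sqlite3.Connection:
    """Create an in-memory database with a small, hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Insecure pattern: user input is interpolated directly into the SQL text,
    # so a crafted value can change the query's logic (SQL injection).
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Secure pattern: a parameterized query. The driver binds `name` as data,
    # never as SQL, so an injection payload is matched as a literal string.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


if __name__ == "__main__":
    conn = setup_db()
    payload = "x' OR '1'='1"  # hypothetical attacker-controlled input
    print(find_user_unsafe(conn, payload))  # returns every row in the table
    print(find_user_safe(conn, payload))    # returns []
```

The two functions differ only in how `name` reaches the database: interpolated into the SQL text versus passed as a bound parameter, which is the usual mitigation for this class of flaw.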

The risks associated with generative AI tools are not hypothetical. Public incidents have shown that unmanaged AI usage can lead to security exposure, licensing risk, and data leakage, reinforcing the need for developer-aware governance of AI-assisted development:

Samsung Data Leak via ChatGPT (2023): Samsung employees accidentally leaked sensitive data while using ChatGPT, highlighting the risks of inputting proprietary information into AI tools.

Amazon’s Confidentiality Warning on ChatGPT (2023): Amazon advised employees against sharing sensitive information with AI platforms, emphasizing the potential for unintentional data breaches.

GitHub Copilot and Licensing Risks (2023): Copilot's generation of code snippets drawn from public repositories, including GPL-licensed code, created legal and security risks for organizations, since proprietary projects could inherit copyleft license obligations or vulnerabilities present in the reproduced code.

While AI tools transform coding workflows, they also bring security challenges that many organizations struggle to address effectively. Archipelo supports AI coding security by making AI-assisted development observable, linking AI tool usage, AI-generated code, and resulting risks to developer identity and actions across the SDLC.

How Archipelo Supports AI Coding Security:

AI Code Usage & Risk Monitor

Monitor AI tool usage across the SDLC and correlate AI-generated code with security risks and vulnerabilities.

Developer Vulnerability Attribution

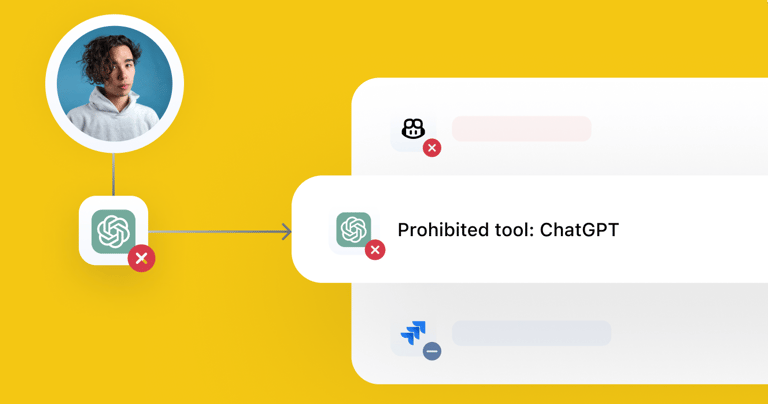

Trace vulnerabilities introduced through AI-assisted development to the developers and AI agents involved.

Automated Developer & CI/CD Tool Governance

Inventory and govern AI tools, IDE extensions, and CI/CD integrations to mitigate shadow AI usage.

Developer Security Posture

Generate insights into how AI-assisted development impacts individual and team security posture over time.
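Attribution of this kind comes down to joining security findings with records of which developer, and which AI tool, produced a change. The Python sketch below shows one way such a join could work. The `Attribution` and `Finding` record shapes, the use of a commit hash as the join key, and the tool name `assistant-x` are all hypothetical assumptions for illustration and do not describe Archipelo's data model or API.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


# Hypothetical record shapes for illustration only; not Archipelo's data model.
@dataclass(frozen=True)
class Attribution:
    commit: str             # commit hash the record describes
    developer: str          # developer identity that authored the change
    ai_tool: Optional[str]  # AI tool involved, or None if no AI assistance was recorded


@dataclass(frozen=True)
class Finding:
    commit: str  # commit that introduced the issue (e.g. reported by a scanner)
    rule: str    # scanner rule or vulnerability class


def attribute_findings(findings, attributions):
    """Join findings to attribution records by commit hash.

    Findings with no matching record are returned separately as unattributed,
    so gaps in attribution stay visible instead of being silently dropped.
    """
    by_commit = {a.commit: a for a in attributions}
    attributed, unattributed = [], []
    for f in findings:
        record = by_commit.get(f.commit)
        if record is None:
            unattributed.append(f)
        else:
            attributed.append((f, record))
    return attributed, unattributed


def posture_summary(attributed):
    """Count attributed findings per (developer, AI tool) pair."""
    return Counter((a.developer, a.ai_tool or "none") for _, a in attributed)


if __name__ == "__main__":
    attributions = [
        Attribution("a1f3", "dev-1", "assistant-x"),  # hypothetical AI tool name
        Attribution("b7c2", "dev-2", None),
    ]
    findings = [
        Finding("a1f3", "sql-injection"),
        Finding("a1f3", "hardcoded-secret"),
        Finding("ffff", "xss"),  # commit with no attribution record
    ]
    attributed, unattributed = attribute_findings(findings, attributions)
    print(posture_summary(attributed))  # Counter({('dev-1', 'assistant-x'): 2})
    print(unattributed)                 # [Finding(commit='ffff', rule='xss')]
```

Returning unattributed findings separately, rather than discarding them, keeps the attribution gap itself measurable. That gap is exactly the "Unattributed AI Usage" risk described earlier.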

The integration of AI into software development is both a revolution and a responsibility. AI-assisted development requires the same discipline applied to any other part of the SDLC: visibility, attribution, and governance.

When AI usage is observable and attributed, organizations can innovate responsibly while reducing security and compliance risk.

Archipelo helps organizations navigate the complexities of AI in software development, ensuring that AI tools contribute to secure, innovative, and resilient applications. It delivers developer-level visibility and actionable insights that help reduce AI-related developer risk across the SDLC.

Contact us to learn how Archipelo supports secure and responsible AI-assisted development while aligning with DevSecOps principles.